Over the past year, AI technologies have evolved rapidly and seen wide adoption across industries.

Microsoft Build 2023 brought forth a wealth of insights on building and maintaining a company AI Copilot. One session, hosted by CTO of Azure Machine Learning Greg Buehrer, focused on the power of Azure Machine Learning (ML) and new tools within Azure designed to help companies develop and run their own “Copilots.” Microsoft defines a “Copilot” as a chatbot app that uses AI—typically text-generating or image-generating AI—to assist with tasks like writing a sales pitch or generating images for a presentation.

Buehrer cited SynerAI as an early example of an enterprise leveraging OpenAI and natural language processing (NLP) along with their own custom integrations to process data and generate valuable consumer insights, particularly around market sentiment.

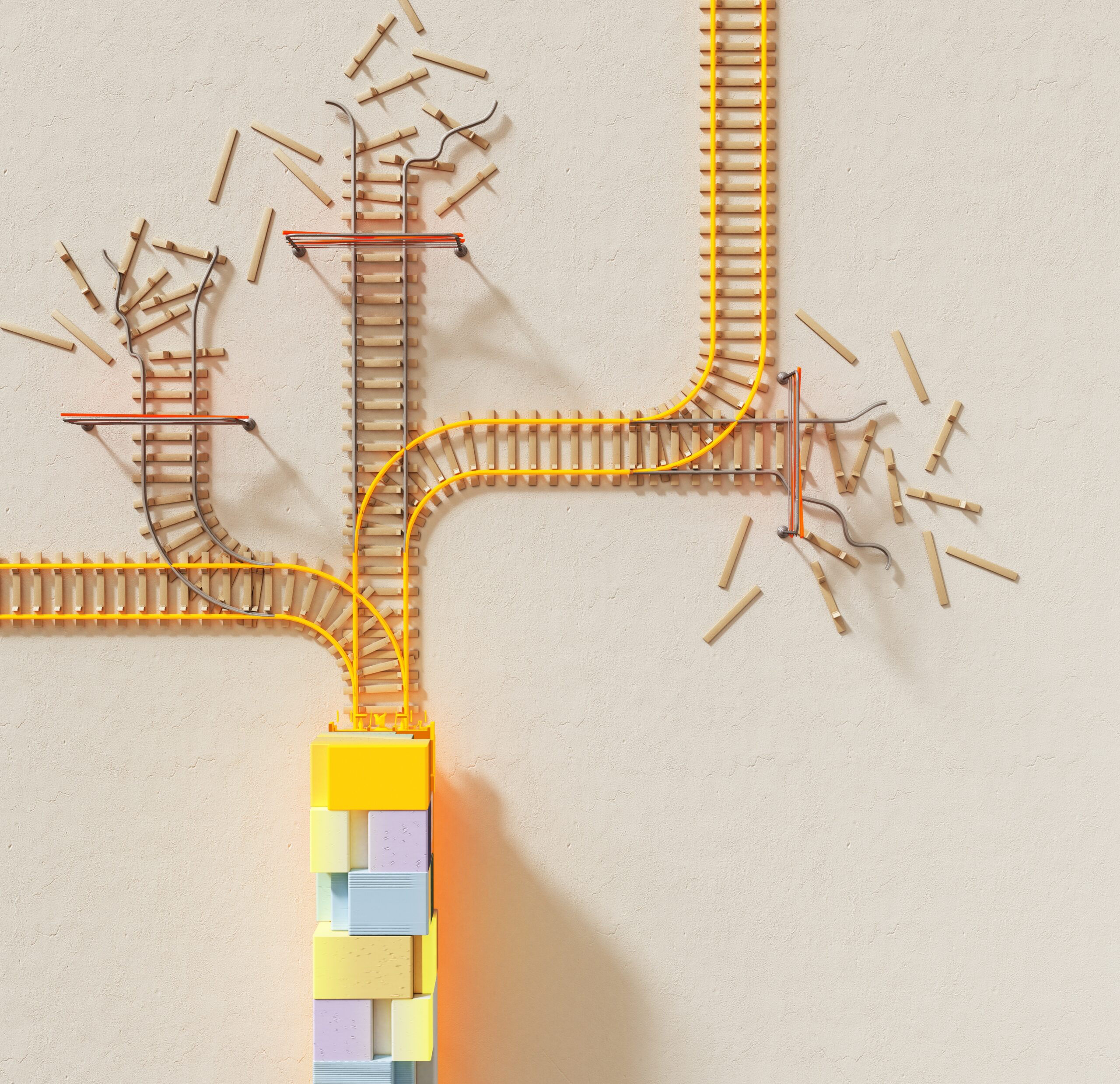

The power behind these applications lies in the AI infrastructure stack: Azure ML, cognitive services, and applied AI services working together as layers of a single stack. The Azure OpenAI service, which offers GPT-3, Codex, DALL-E, and a preview version of GPT-4, supports deployment with custom datasets and integrates with Azure’s plugin ecosystem.

Azure Machine Learning takes center stage in this stack as the platform for building generative AI applications. It adds Azure-native capabilities for prompt engineering and evaluation, the Prompt flow tool suite, and safeguards such as responsible AI practices and Azure Content Safety. Together, these make it more manageable to build generative AI applications at scale, monitor models, and deploy apps.

Large language models (LLMs), at their core, take a bounded window of tokens (words or word pieces) as input and predict the next token, repeating that step to build up a response. Demonstrations by Seth Juarez and Daniel Schneider in the Chat playground of Azure AI Studio highlighted this behavior. They showed how a system message can set the Copilot's behavior and the parameters that shape its output. For instance, when instructed to act as an authority on the Eurovision competition, the model answered Eurovision questions aptly, while questions on any other subject were answered simply with the output “Salsa.”
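The system message idea above can be sketched as a small message list in the role/content shape that chat-style APIs commonly accept. This is only an illustration: the wording of `SYSTEM_MESSAGE` and the helper `build_messages` are assumptions, not the text or code used in the demo, and no model is actually called.

```python
def build_messages(system_message, user_question):
    """Return a chat-style message list with the system message first."""
    return [
        {"role": "system", "content": system_message},
        {"role": "user", "content": user_question},
    ]


# Hypothetical wording; the exact system message from the demo is not given.
SYSTEM_MESSAGE = (
    "You are an authority on the Eurovision competition. "
    "If a question is about any other subject, reply only with 'Salsa.'"
)

messages = build_messages(SYSTEM_MESSAGE, "How does the voting work?")
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Because the system message always comes first, every user question is interpreted under the same instructions, which is what keeps the Copilot on topic.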

A fascinating technique used with these models is Retrieval Augmented Generation (RAG). RAG supplies the model with recent or external information at query time, so its output can draw on data beyond the original training set. The Eurovision Copilot from the earlier demo did not have recent enough training data to know the 2023 winner, but once it was given text from the current Wikipedia article, it could answer questions about that information.
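A minimal sketch of the RAG step described above: pick the passage most relevant to the question, then place it in the prompt ahead of the question. The helper names (`tokenize`, `retrieve`, `build_grounded_prompt`) and the placeholder passages are assumptions for illustration, and simple word overlap stands in for the retrieval a production system would use.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, passages, top_k=1):
    """Rank passages by how many words they share with the question."""
    question_tokens = tokenize(question)
    ranked = sorted(
        passages,
        key=lambda passage: len(question_tokens & tokenize(passage)),
        reverse=True,
    )
    return ranked[:top_k]


def build_grounded_prompt(question, passages):
    """Put the retrieved context in front of the question."""
    context = "\n".join(f"- {p}" for p in retrieve(question, passages))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Placeholder passages; a real run would load text from the source article.
PASSAGES = [
    "Placeholder: the 2023 contest winner was <name from the article>.",
    "Placeholder: the contest uses a mix of jury and public voting.",
]

QUESTION = "Who was the 2023 winner?"
prompt = build_grounded_prompt(QUESTION, PASSAGES)
print(prompt)
```

The model never needs retraining here: only the prompt changes, which is why this approach suits facts that postdate the training data.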

The session featured a customer service Copilot demo for Contoso, an outdoor supply company. Contoso’s customer service backend, powered by an Azure-built Copilot written in Python with LangChain, returns customer-specific and product-related information in response to user queries. The flow, which was also developed using Azure’s Prompt flow, is aimed at improving customer service interactions.

With this specific Copilot, a customer asks a question in a chat box, which is used as an input that filters through a series of chains and indexes to get useful, specific output (e.g., the climate rating on a sleeping bag). The customer service representative can then use these outputs to structure their response to the customer. The beauty of this Copilot-based approach is that it utilizes AI for speed and accuracy without removing the element of human interaction.

Azure also lets you deploy a Copilot to authenticated endpoints and monitor the app's performance for data insights. In addition, a bulk test feature runs many prompts at once, giving a measure of how well the outputs align with the desired objectives.
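The bulk testing idea above can be sketched as a small harness that runs many prompts through a Copilot and reports a pass rate. This is not Azure's bulk test feature: `bulk_test`, `stub_copilot`, and the substring check are hypothetical stand-ins, and a real evaluation would call the deployed endpoint and use a richer scoring method.

```python
def bulk_test(copilot, cases):
    """Run every (prompt, expected_text) case and compute the pass rate."""
    results = []
    for prompt, expected in cases:
        output = copilot(prompt)
        results.append(
            {
                "prompt": prompt,
                "output": output,
                # Simple check: does the output mention the expected text?
                "passed": expected.lower() in output.lower(),
            }
        )
    pass_rate = sum(r["passed"] for r in results) / len(results) if results else 0.0
    return results, pass_rate


def stub_copilot(prompt):
    """Hypothetical stand-in for a call to a deployed Copilot endpoint."""
    if "sleeping bag" in prompt.lower():
        return "The sleeping bag's climate rating is listed on its product page."
    return "I'm not sure."


CASES = [
    ("What is the climate rating on this sleeping bag?", "climate rating"),
    ("Do you sell tents?", "tent"),
]

results, pass_rate = bulk_test(stub_copilot, CASES)
for result in results:
    print(result["passed"], "|", result["prompt"])
print(f"pass rate: {pass_rate:.0%}")
```

Keeping the per-case results alongside the aggregate rate makes it easy to see which prompts fail after a prompt or model change.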

GPT-4 can also be used to score Azure-built Copilots on “groundedness”: how closely an LLM’s responses stick to a specific dataset, such as information unique to your business. Prompt flow also provides more advanced data science capabilities, such as creating vector indexes to inform your custom model. To monitor and improve a model’s performance over time, Azure offers dashboards that track metrics like groundedness. In addition, a model catalog lets you use and fine-tune a variety of models, including GPT-3 base models such as Davinci, Curie, Babbage, and Ada.
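To make the vector index idea concrete, here is a toy sketch: each document is turned into a vector, and a query returns the document whose vector is closest by cosine similarity. Everything here is an assumption for illustration. `embed` uses plain word counts where a real index would use a learned embedding model, and `VectorIndex` is a hypothetical class, not a Prompt flow API.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts (a real index would use an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorIndex:
    """Hypothetical in-memory index: stores each document with its vector."""

    def __init__(self, documents):
        self.entries = [(doc, embed(doc)) for doc in documents]

    def search(self, query, top_k=1):
        """Return the top_k documents most similar to the query."""
        query_vector = embed(query)
        ranked = sorted(
            self.entries,
            key=lambda entry: cosine(query_vector, entry[1]),
            reverse=True,
        )
        return [doc for doc, _ in ranked[:top_k]]


# Placeholder documents standing in for business-specific data.
index = VectorIndex(
    [
        "Placeholder product note: sleeping bag climate rating details.",
        "Placeholder policy note: returns are handled by customer service.",
    ]
)
print(index.search("sleeping bag climate rating"))
```

The documents returned by a search like this are what would be passed to the model as context, which is also what a groundedness score is then measured against.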

Notably, these Azure AI applications extend beyond chat. The presenters noted, for instance, that the ability to transcribe phone conversations widens the potential for data analysis and customer service improvement.

To summarize, Azure ML and GPT-4 together offer a broad toolkit for building and maintaining corporate AI Copilots, covering prompt design, retrieval over company data, evaluation, deployment, and monitoring. As AI technology continues to evolve, these tools are positioned to change day-to-day operations and customer service experiences. For organizations that want to keep pace, now is a good time to start integrating Azure ML and GPT-4 into business strategy.
