Looking Back at 2025: A Year of Metamorphosis

When I sat down to think about all the things I could write about to sum up the year, it immediately felt overwhelming. So much has changed about our organization; the customers we serve, the product...

When we look back on 2024 in terms of technology advancements and changes, one topic will likely keep coming up: AI. This year has been defined, and perhaps even redefined, by the explosion of the GPT landscape, which began in the second half of 2023.

As the features, functionality, and use cases for AI continue to grow and evolve, we are already seeing an influx of new terminology associated with these models. Retrieval Augmented Generation (RAG), the ability to have a GPT model reach out to external data sources to provide more comprehensive responses, has dominated deployments. So too have few-shot learning, Semantic Kernel, and APIM (API Management), tools rapidly redefining how AI models are built and interacted with.

In the same vein is another term that has been gaining ground as the year has progressed: Fine-Tuning. Fine-Tuning sits at the far edge of GPT model specialization, for cases where efforts involving RAG, few-shot learning, and system prompts don’t quite achieve the intended results. What is Fine-Tuning specifically, then? Fine-Tuning is the process of training a new GPT model from an existing model using a set of training data, to specialize the model’s output to the desired dataset.

When, then, does it make sense to use Fine-Tuning? There are several areas where Fine-Tuning could yield a highly specialized model that outperforms an out-of-the-box model. The most obvious use case involves private internal data written in domain-specific language, such as in healthcare or finance. In these cases, Fine-Tuning allows you to build a model that is trained specifically on your organization’s data, including the proper vernacular, context, and even tone. This creates an experience that is highly specialized and would be hard, if not impossible, to match with a standard model. Note that it does not have to be a choice between a RAG-supported model and a Fine-Tuned model: great success can be achieved by combining a Fine-Tuned model with RAG capabilities.

Additionally, Fine-Tuning can be used for cost optimization: training a smaller model on your dataset allows it to perform more quickly, efficiently, and cheaply than a standard model. Consider a scenario where you deploy a standard GPT-4o model and provide it with a system prompt and few-shot learning examples, but the results are not ideal. Rather than paying a higher cost per token for a less-than-desirable experience, you can deploy a GPT-4o Mini model, Fine-Tune it with a strong training dataset, and use that deployment to provide better responses, while saving the difference in the cost of input/output tokens.

Let’s say you’re now interested in applying Fine-Tuning to your own model: how do you go about it? Fine-Tuning is only as successful as the training dataset you use. To achieve good results, you will need a training file that contains hundreds, if not thousands, of prompt/completion pairs to appropriately train the model on your desired output. That training file is formatted in JSONL. Those pairs also need to be relevant to the desired output of your Fine-Tuned model. Loosely associated pairs will achieve the opposite of good Fine-Tuning and produce a model that is likely weaker than the standard offering.
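As a rough illustration of what such a JSONL file could look like, here is a minimal Python sketch that turns prompt/completion pairs into one JSON object per line. It assumes the chat-style `messages` layout (system, user, assistant) used by chat models; the function names and the example pair are hypothetical, and the exact schema should be checked against Microsoft’s documentation for the model you choose.

```python
import json


def to_chat_example(prompt, completion, system_prompt=""):
    """Wrap one prompt/completion pair in a chat-style "messages" record.

    The layout (system / user / assistant) is an assumption for illustration.
    """
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": completion},
        ]
    }


def write_jsonl(pairs, path, system_prompt=""):
    """Write one JSON object per line (JSONL) and return the number of lines."""
    count = 0
    with open(path, "w", encoding="utf-8") as handle:
        for prompt, completion in pairs:
            record = to_chat_example(prompt, completion, system_prompt)
            handle.write(json.dumps(record, ensure_ascii=False) + "\n")
            count += 1
    return count
```

Calling `write_jsonl([("What does PRN mean on a chart?", "PRN means the medication is given as needed.")], "training.jsonl")` would produce a one-line file with a blank system prompt; a real dataset would contain far more pairs than this.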

For more information on how to create an appropriate training file, Microsoft’s Fine-Tuning Learn page has plenty of useful examples. The only hard-and-fast rule for training files is that they must contain at least 10 training examples. In practice, however, you should aim for a much higher number. Building the file can be time-consuming and quite iterative, but it is a task that an existing GPT model can potentially assist with: in effect, AI training AI.
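Before uploading anything, it can be worth running a quick sanity check on the file. The sketch below is one possible approach, not an official validator: it assumes each line is a JSON object with a `messages` list containing at least one `user` and one `assistant` turn, and it enforces the 10-example minimum mentioned above. The function name is hypothetical.

```python
import json

MIN_EXAMPLES = 10  # the minimum number of training examples stated above


def check_training_lines(lines):
    """Return (valid_example_count, problems) for an iterable of JSONL lines."""
    problems = []
    valid = 0
    for number, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError as error:
            problems.append(f"line {number}: not valid JSON ({error.msg})")
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"line {number}: missing a non-empty 'messages' list")
            continue
        roles = {m.get("role") for m in messages if isinstance(m, dict)}
        if "user" not in roles or "assistant" not in roles:
            problems.append(f"line {number}: needs a user and an assistant turn")
            continue
        valid += 1
    if valid < MIN_EXAMPLES:
        problems.append(
            f"only {valid} valid examples; at least {MIN_EXAMPLES} are required"
        )
    return valid, problems
```

Passing an open file handle (for example `check_training_lines(open("training.jsonl", encoding="utf-8"))`) returns the count of usable examples and a list of human-readable problems, which is empty when the file passes these basic checks.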

Figure 1: A segment of a training file that has left the system prompt blank. Note the JSONL syntax.

Once you have a dataset sufficient for your training, you’ll need to train your model of choice. Currently, various versions of GPT-3.5, GPT-4, GPT-4o, and 4o-mini can be Fine-Tuned, as well as Babbage and Davinci. Also make sure that the model you want to Fine-Tune is deployed to a region that supports Fine-Tuning. Support varies per model and is not as widely available across Azure regions as the base GPT models are, but more information can be found in Microsoft’s documentation. Even if you don’t plan to Fine-Tune immediately, it’s a good idea to have an Azure OpenAI service deployed to a Fine-Tuning eligible region if you believe you eventually will, to avoid being region-locked from doing so. Once you have selected your model and region, you’ll need to upload your training dataset, which can be done from Azure AI Studio, through the REST API, or with the Python SDK.
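For the Python SDK route, the upload and job creation might look roughly like the sketch below. It assumes a client object exposing `files.create` and `fine_tuning.jobs.create`, as the `openai` Python package’s `AzureOpenAI` client does; the helper name `start_fine_tuning` is hypothetical, and the base model name you pass in must be one your region actually supports.

```python
def start_fine_tuning(client, training_path, base_model, validation_path=None):
    """Upload the dataset file(s) and create a fine-tuning job.

    `client` is assumed to be an openai.AzureOpenAI instance (or anything
    exposing the same files / fine_tuning.jobs interface).
    Returns whatever the client returns for the created job.
    """
    # Upload the training file with the "fine-tune" purpose.
    with open(training_path, "rb") as handle:
        training_file = client.files.create(file=handle, purpose="fine-tune")

    job_args = {"training_file": training_file.id, "model": base_model}

    # The validation file is optional; upload it only if one was given.
    if validation_path is not None:
        with open(validation_path, "rb") as handle:
            validation_file = client.files.create(file=handle, purpose="fine-tune")
        job_args["validation_file"] = validation_file.id

    return client.fine_tuning.jobs.create(**job_args)
```

With the `openai` package installed, a client could be built with `AzureOpenAI(azure_endpoint=..., api_key=..., api_version=...)` using your own resource’s values, and then passed to this helper along with the path to your JSONL file.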

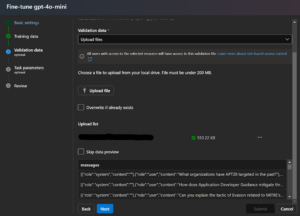

If you want the best training experience, you can also supply a validation dataset that performs “checks” after each pass of training to determine how well the model is being optimized. This is entirely optional but easy to include: simply set aside 20% of your total training data (so, 200 examples from a dataset of 1,000 examples) and use it for validation instead of training. This gives you additional metrics to review once training has completed, helping you judge how well the model now predicts your more specialized information.
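The 80/20 split described above can be sketched in a few lines of Python. This is only one way to do it; the function name, the default fraction, and the fixed seed (used so the split is repeatable) are illustrative choices rather than anything the service requires.

```python
import random


def split_examples(examples, validation_fraction=0.2, seed=0):
    """Shuffle the examples and hold out a fraction of them for validation.

    Returns (training_examples, validation_examples) as two lists.
    """
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    validation_count = round(len(shuffled) * validation_fraction)
    return shuffled[validation_count:], shuffled[:validation_count]
```

With 1,000 examples and the default fraction, this yields 800 training examples and 200 validation examples, with no example appearing in both sets.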

Figure 2: Uploading Validation Dataset in Azure AI Studio, as part of the Fine-Tuning Training Process. Previewing the examples allows you to confirm in real time if the examples are what you want to validate with.

Once the training job is kicked off, Azure OpenAI handles the entirety of the training, which can last anywhere from an hour to a handful of hours, depending on how large the training file associated with the job is. Once it completes, if you used Azure AI Studio, you can review the training metrics to see how the model fared throughout each step of the training, as well as how accurate the Fine-Tuned model becomes when measured against the validation dataset. These metrics can also be downloaded as a CSV file, which shows the step-by-step progress of the model after each pass of the training dataset. This can help identify points of diminishing returns and potential weak spots in the training dataset, and it provides a granular view of how successful (or not) the training was.
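One simple way to look for a point of diminishing returns in the downloaded CSV is to find the step with the lowest validation loss. The sketch below assumes the file has columns named `step` and `valid_loss`; those column names are an assumption and should be adjusted to match the header of your actual metrics file.

```python
import csv
import io


def best_validation_step(csv_text, step_column="step", loss_column="valid_loss"):
    """Return (step, loss) for the row with the lowest validation loss.

    Rows without a validation loss value are skipped. Returns None if no
    row has one. Column names are assumptions about the metrics file.
    """
    best = None
    for row in csv.DictReader(io.StringIO(csv_text)):
        raw = (row.get(loss_column) or "").strip()
        if not raw:
            continue  # validation may not be recorded at every step
        loss = float(raw)
        step = int(float(row[step_column]))
        if best is None or loss < best[1]:
            best = (step, loss)
    return best
```

If validation loss bottoms out well before the final step and then climbs while training loss keeps falling, that is a hint the later passes are overfitting, and an earlier checkpoint may be the better deployment candidate.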

Figure 3: The Fine-Tuning Metrics page, showing the decreased token loss, and increased token accuracy, over the course of a training.

If you feel comfortable with the results, you can then deploy the Fine-Tuned model, or one of the checkpoints that were created during the training. This allows you to select the most appropriate epoch (a full pass over the training data) for your deployment. If you want to train the model further or decide to update your training dataset, Azure AI Studio allows for Continuous Fine-Tuning. This means applying a new training dataset to an existing Fine-Tuned model, further improving its accuracy or widening the scope of its specialization.

One final note about Fine-Tuning, and how it applies to the newer GPT-4/4o/4o mini models: there is built-in security to prevent the Fine-Tuning of models that include harmful content. This covers the training (and validation) dataset as well as the deployed model. If, at any time, the data appears to include violent, hateful, or unfair content, further deployment of the model is blocked. These built-in guardrails help prevent Fine-Tuned models from existing outside of Microsoft’s and OpenAI’s content filtering policies. Furthermore, they prevent organizations from accidentally deploying models that could be compromised by harmful datasets.

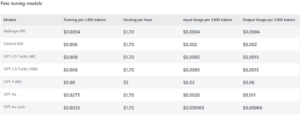

As with all AI-associated tasks, there is invariably the question of “How much?” The good news is that the answer is now “less than ever!” In late July, Microsoft restructured its training prices from $/hour to $/token. Previously, training larger models could easily become an expensive affair, but with these pricing changes, even the most robust training efforts are much more affordable. In many cases, the savings are 40-80% off the original per-hour prices.

If that wasn’t enough, Microsoft has also lowered the per-hour hosting price for most of the models that can be Fine-Tuned, making it even more feasible to use your specialized, Fine-Tuned model as your main GPT system. This means that you not only see potential savings from using a smaller model for the same work, but also benefit from lower pricing when training and running the Fine-Tuned variant moving forward!

Figure 4: North Central US Region Fine-Tuning Prices, courtesy of Microsoft

Compared to their “out-of-the-box” counterparts, Fine-Tuned models are still more expensive to run, mainly thanks to that $1.70/hour hosting baseline, but the benefits are still there, especially in the case of GPT-4o mini. In its specific case, you can potentially Fine-Tune a Mini model to be as powerful in a particular domain as the standard 4o Global Deployment, while being faster and noticeably cheaper. Ultimately, the deciding factor for many organizations will be a combination of having enough quality training data to appropriately Fine-Tune a model and having determined that RAG and/or prompt engineering are not sufficient to elicit the desired responses.
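To make that trade-off concrete, the sketch below estimates a monthly bill as token charges plus hourly hosting, and computes the token volume at which a cheaper-per-token Fine-Tuned model pays back its hosting fee. Only the $1.70/hour figure comes from the discussion above; the function names, the 730-hour month, and any per-token prices you plug in are assumptions to be replaced with the current numbers for your region.

```python
def monthly_cost(input_tokens, output_tokens,
                 input_price_per_million, output_price_per_million,
                 hosting_per_hour=0.0, hours=730):
    """Estimated monthly cost: token charges plus any hourly hosting fee.

    hours=730 approximates one month of always-on hosting (an assumption).
    """
    token_cost = (input_tokens / 1_000_000 * input_price_per_million
                  + output_tokens / 1_000_000 * output_price_per_million)
    return token_cost + hosting_per_hour * hours


def break_even_million_tokens(hosting_cost, standard_price, fine_tuned_price):
    """Millions of tokens per month at which the hosting fee is paid back.

    Prices are blended $ per million tokens. Returns None when the
    Fine-Tuned model is not cheaper per token, since it never breaks even.
    """
    if standard_price <= fine_tuned_price:
        return None
    return hosting_cost / (standard_price - fine_tuned_price)
```

For example, hosting alone at $1.70/hour for 730 hours comes to about $1,241 per month, so the per-token savings from the smaller model need to exceed that amount before the Fine-Tuned deployment is cheaper on cost alone.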

As the prevalence of AI continues to grow, so does our ability to specialize models and deployments. Fine-Tuning won’t be a catch-all for powering up a standard model, but in instances where RAG and prompt engineering alone don’t achieve your targeted results, it provides powerful, pointed training that can highly customize a deployment.

Considering the recent price changes made by Microsoft, and the inclusion of Fine-Tuning for the already cost-efficient Mini GPT models, there is real value in training your own deployment to capture industry-specific terminology, tone, or details that simply cannot be achieved with out-of-the-box models. Tack on further security safeguards to prevent potentially harmful content from being injected into training materials, and there has never been a better time to give Fine-Tuning a try with your own GPT instance!

“Azure OpenAI Service Models – Azure OpenAI.” Azure OpenAI | Microsoft Learn, learn.microsoft.com/en-us/azure/ai-services/openai/concepts/models#fine-tuning-models. Accessed 11 Sept. 2024.

“Customize a Model with Azure OpenAI Service – Azure OpenAI.” Customize a Model with Azure OpenAI Service – Azure OpenAI | Microsoft Learn, learn.microsoft.com/en-us/azure/ai-services/openai/how-to/fine-tuning?tabs=turbo%2Cpython-new&pivots=programming-language-ai-studio. Accessed 11 Sept. 2024.

“Fine Tune GPT-4o on Azure OpenAI Service.” TECHCOMMUNITY.MICROSOFT.COM, 3 Sept. 2024, techcommunity.microsoft.com/t5/ai-azure-ai-services-blog/fine-tune-gpt-4o-on-azure-openai-service/ba-p/4228693#:~:text=Fine-tuning%20GPT-4o. Accessed 11 Sept. 2024.

“Pricing Update: Token Based Billing for Fine Tuning Training 🎉.” TECHCOMMUNITY.MICROSOFT.COM, 4 July 2024, techcommunity.microsoft.com/t5/ai-azure-ai-services-blog/pricing-update-token-based-billing-for-fine-tuning-training/ba-p/4164465. Accessed 11 Sept. 2024.

“Save Big on Hosting Your Fine-Tuned Models on Azure OpenAI Service.” TECHCOMMUNITY.MICROSOFT.COM, 1 Aug. 2024, techcommunity.microsoft.com/t5/ai-azure-ai-services-blog/save-big-on-hosting-your-fine-tuned-models-on-azure-openai/ba-p/4195386. Accessed 11 Sept. 2024.
