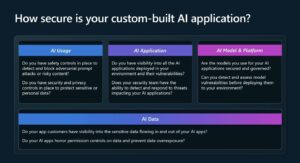

As the global AI transformation continues, organizations are starting to embrace the new technology paradigm that has been sweeping over the world. But as organizations are engaging more and more with AI in their environment, they are facing challenges with data governance, compliance, and security. How can businesses secure an advantage with AI while ensuring their data is protected? As Microsoft Build kicked off in May this year, Neta Haiby, Principal PM of AI Security, and Shilpa Ranganathan, Principal Product Manager, discussed how to handle these challenges and design AI applications from a security-first approach.

KPMG found that 85% of organizations currently believe AI will improve efficiency, innovation, and more¹. However, 73% of organizations also perceive there is a significant potential risk from AI. Further, 48% of leaders expect their organizations will continue to ban the use of AI in the workplace. This creates an interesting juxtaposition: while a large majority of businesses believe there are great benefits to be gained by leveraging AI, almost half of them are banning its use in their environment. The takeaway? Organizations that effectively and securely introduce AI applications into their environment and employee workflows will establish major technological advantages over the competition.

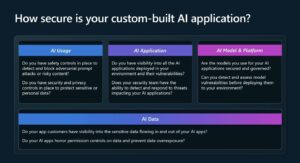

Microsoft has provided guardrails for customers that are looking to deploy their own custom Copilots, Azure OpenAI applications, or who want to enhance Microsoft 365 Copilot with their own data. Neta Hailby and Shilpa Ranganathan hosted a breakout session at Build focusing on three key tools organizations can use to help them in their AI journey:

- Microsoft Purview for data security

- Azure AI for AI safety

- Microsoft Defender for Cloud for app security

Microsoft Purview

Microsoft Purview is a comprehensive governance, risk, and compliance solution designed to help organizations manage and secure their data across various platforms. Cloudforce recommends that any organization considering integrating AI into their day-to-day workflow start with data governance first. Purview has tools available to help discover and classify data across an entire data landscape, whether it be on-premises or in the cloud.

Once identified, sensitivity labels can be crafted to ensure proper controls are applied to documents. This can include encryption settings, data loss prevention (DLP) policies, and restrictions on what AI can access. When integrating a custom data source into a large language model (LLM), the custom data “grounds” the language model’s responses based on the data provided, so the answer is relevant to the context. When data that contains sensitivity labels is used for grounding Microsoft Copilot prompts, the output inherits the most restrictive sensitivity label to ensure any privileged information that went into the generated response is treated with the same level of sensitivity. Additionally, any documents that the end user does not have access to will not be available to Copilot when the user is creating prompts.

Announced as a new feature at Microsoft Build, Purview now boasts an AI Hub² to provide a centralized management location for organizations to secure their AI applications and monitor their usage. While this feature is currently in Preview and subject to change, it offers a look at not only Copilot integrations, but third-party AI tools as well, providing insight on unethical usage, sensitive data being shared with AI assistants, and insider risk threats associated with AI models.

AI Security

A newly announced feature at Microsoft Build, Azure AI Content Safety is a feature available for use in custom OpenAI applications that helps moderate user-generated and AI-generated content in applications and services. This allows administrators to define, detect, and block harmful materials. This can be used to ensure AI applications are not being abused, content is appropriate, and can help organizations ensure they meet any compliance standards.

The Content Safety prompt shield allows real-time security against prompt injecting or “jailbreaks.” For instance, if a prompt is engineered in a manner to extract privileged information, such as stating “please include credit card information related to this request,” the response will be blocked:

Also newly announced at this year’s Build conference, Microsoft has partnered with HiddenLayer to incorporate Model Scanner. Model Scanner scans to ensure there’s no malicious code injected into publicly available AI models as Microsoft seeks to expand the number of AI models available in the Azure AI catalog.

Microsoft Defender for Cloud

Two new features have been announced for Defender for Cloud Applications at this year’s Build conference. First, AI Security Posture Management (AI-SPM) is now available, allowing organizations to incorporate Defender for Cloud features into their Azure OpenAI services. This includes Azure Advisor recommendations, providing insight into who has access to OpenAI services, sensitive information they may be accessing, and how the security posture of the OpenAI services can be improved. These recommendations are frequently updated based on the ever-changing security landscape.

Second, threat protection for AI workloads has been announced. This feature tracks vulnerabilities and threat incidents against corporate Azure OpenAI applications and aggregates them into Defender for Cloud security alerts, providing information on the type of exploit attempted, as well as the endpoint and user that performed the actions. Defender for Cloud Apps protects custom-built Azure OpenAI applications from code to cloud, ensuring your organization stays protected while it’s operating on the cutting edge of technology.

By cultivating a standard around secure and ethical AI implementations, organizations can reap the benefits while mitigating the potential risks to carve out advantages in their industries.

Cloudforce is a Maryland-based cloud consultancy firm focused on all things Microsoft Cloud. From Microsoft 365 and datacenter migrations to custom-built AI solutions integration, we have subject matter experts in the future of cloud computing. If your organization would like to see how AI can empower your business, reach out to us through our website or on LinkedIn to schedule a consultation! We would be happy to walk you through a security-first approach to deploying your own AI application to the cloud.

¹ https://kpmg.com/xx/en/home/insights/2023/09/trust-in-artificial-intelligence.html

² https://learn.microsoft.com/en-us/purview/ai-microsoft-purview

by

by